Developer, innovator, life-long student. Computer Science graduate student at @DePaulU. VP/COO @bnonews, now engineering and coding for good.

260 posts

Latest posts by techyminute

Making AI systems that see the world as humans do

A Northwestern University team developed a new computational model that performs at human levels on a standard intelligence test. This work is an important step toward making artificial intelligence systems that see and understand the world as humans do.

“The model performs in the 75th percentile for American adults, making it better than average,” said Northwestern Engineering’s Ken Forbus. “The problems that are hard for people are also hard for the model, providing additional evidence that its operation is capturing some important properties of human cognition.”


Amazing video on artificial intelligence! Could it be OUR worst doomsday scenario?

I’ve been working on this video for ages. I think it’s probably the most important issue to know about right now.

#Google #AI

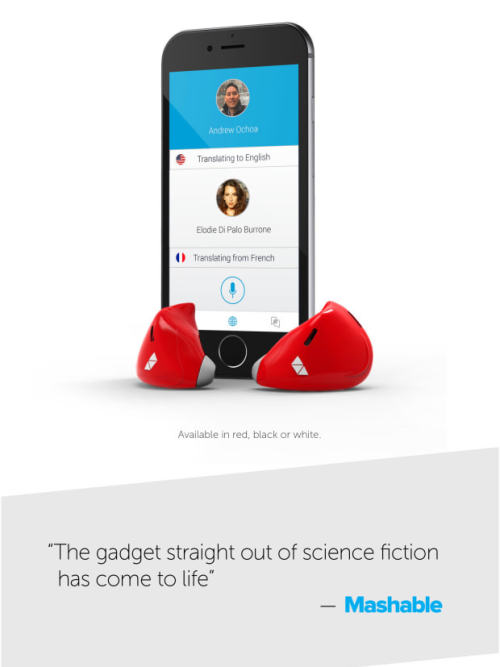

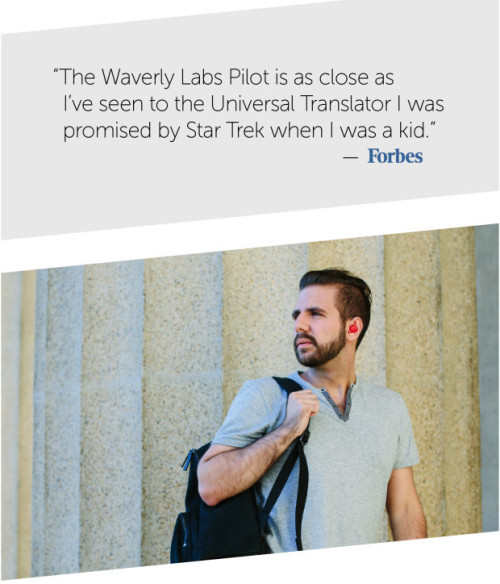

Pilot, the real-time universal translator, is straight out of a sci-fi novel

The ability to understand someone speaking a foreign language could soon be as easy as wearing a new earpiece.

Waverly Labs is behind an earpiece called the Pilot that lets individuals translate languages in real time, eerily similar in scope to Star Trek’s Universal Translator.

The smart earpiece works by canceling out ambient noise to concentrate on what a speaker is saying, and then funnels that audio to a companion app that runs it through translation and speech synthesis, according to its website.

Pilot isn’t the first — and likely won’t be the last — piece of tech made for the translation marketplace.

However, where this device really shines is its instantaneous translation, which removes the sometimes awkward waiting game.

follow @the-future-now

Five NASA Technologies at the 2017 Consumer Electronics Show

This week, we’re attending the International Consumer Electronics Show (CES), where we’re joining industrial pioneers and business leaders from across the globe to showcase our space technology. Since 1967, CES has been the place for next-generation innovations to make their marketplace debut.

Our technologies are driving exploration and enabling the agency’s bold new missions to extend the human presence beyond the moon, to an asteroid, to Mars and beyond. Here’s a look at five technologies we’re showing off at #CES2017:

1. IDEAS

Our Integrated Display and Environmental Awareness System (IDEAS) is an interactive optical computer for smart glasses. The idea behind IDEAS is to enhance real-time operations by providing augmented reality data to field engineers here on Earth and in space.

This device would allow users to see and modify critical information on a transparent, interactive display without taking their eyes or hands off the work in front of them.

This wearable technology could dramatically improve the user’s situational awareness, thus improving safety and efficiency.

For example, an astronaut could see health data, oxygen levels or even environmental emergencies like “invisible” ethanol fires right on their helmet view pane.

And while the IDEAS prototype is an innovative solution to the challenges of in-space missions, it won’t just benefit astronauts—this technology can be applied to countless fields here on Earth.

2. VERVE

Engineers at our Ames Research Center are developing robots to work as teammates with humans.

They created a user interface called the Visual Environment for Remote Virtual Exploration (VERVE) that allows researchers to see from a robot’s perspective.

Using VERVE, astronauts on the International Space Station remotely operated the K10 rover—designed to act as a scout during NASA missions to survey terrain and collect science data to help human explorers.

This week, Nissan announced that a version of our VERVE was modified for its Seamless Autonomous Mobility (SAM), a platform for the integration of autonomous vehicles into our society. For more on this partnership: https://www.nasa.gov/ames/nisv-podcast-Terry-Fong

3. OnSight

Did you know that we are leveraging technology from virtual and augmented reality apps to help scientists study Mars and to help astronauts in space?

The Ops Lab at our Jet Propulsion Laboratory is at the forefront of deploying these groundbreaking applications to multiple missions.

One project we’re demonstrating at CES is our OnSight tool, a mixed reality application developed for the Microsoft HoloLens that enables scientists to “work on Mars” together from their offices.

Supported by the Mars 2020 and Curiosity missions, it is currently in use by a pilot group of scientists for rover operations. Another HoloLens project is being used aboard the International Space Station to empower the crew with assistance when and where they need it.

At CES, we’re also using the Oculus Rift virtual reality platform to provide a tour of our Space Launch System (SLS) from the launchpad at our Kennedy Space Center. SLS will be the world’s most powerful rocket and will launch astronauts in the Orion spacecraft on missions to an asteroid and eventually to Mars. Engineers continue to make progress toward delivering the first SLS rocket to Kennedy in 2018.

4. PUFFER

The Pop-Up Flat Folding Explorer Robot, PUFFER, is an origami-inspired robotic technology prototype that folds into the size of a smartphone.

It is a low-volume, low-cost enhancement whose compact design means that many little robots could be packed into a larger “parent” spacecraft and deployed on a planet’s surface to increase surface mobility. It’s like a Mars rover Mini-Me!

5. ROV-E

Our Remote Operated Vehicle for Education, or ROV-E, is a six-wheeled rover modeled after our Curiosity rover and the future Mars 2020 rover.

It uses off-the-shelf, easily programmable computers and 3D-printed parts. ROV-E has four modes, ranging from user-controlled driving to sensor-based hazard avoidance and “follow me” modes. ROV-E can also answer questions about Mars and follow voice commands.

ROV-E was developed by a team of interns and young, up-and-coming professionals at NASA’s Jet Propulsion Laboratory who wanted to build a Mars rover from scratch to help introduce students and the public to Science, Technology, Engineering & Mathematics (STEM) careers, planetary science and our Journey to Mars.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com

This indoor smart garden helps you grow basil and lettuce with zero effort

Plus it looks like part of the decor!

Photography by Amelia Holowaty Krales for The Verge

Prosthetic electrodes will return amputees’ sense of touch

For all the functionality and freedom that modern prosthetics provide, they still cannot give their users a sense of what they’re touching. That may soon change thanks to an innovative electrode capable of connecting a prosthetic arm’s robotic sense of touch to the human nervous system that it’s attached to. It reportedly allows its users to feel heat, cold and pressure by stimulating the ulnar and median nerves of the upper arm.


Color can convey a mood or elicit a particular emotion and, in web design, color can influence attitudes, perceptions, and behaviors. However, many websites make inaccessible color choices. Many online color palette design tools focus on helping designers with either the aesthetics or the accessibility of colors, but not both.

This paper in ACM TACCESS presents the Accessible Color Evaluator (ACE, daprlab.com/ace), which enhances web developers’ and designers’ ability to balance aesthetic and accessibility constraints.

Courtesy: ACM
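On the accessibility side of that balance, the usual yardstick is text/background contrast. The post doesn't say which metric ACE uses, so this is a minimal sketch assuming the standard WCAG 2.x contrast-ratio formula; the function names (`relative_luminance`, `contrast_ratio`, `passes_aa`) are hypothetical and not part of ACE.

```python
"""Sketch of a WCAG 2.x contrast check (assumed metric, not ACE's own code)."""


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, per WCAG 2.x."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter of the two colors."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    # Mid-gray text on a white background, checked at both text sizes.
    print(round(contrast_ratio("#767676", "#FFFFFF"), 2))
    print(passes_aa("#777777", "#FFFFFF"), passes_aa("#777777", "#FFFFFF", large_text=True))
```

Sorting the two luminances makes the ratio independent of argument order. A tool that balances aesthetics against accessibility could plausibly use a check like this as a hard constraint while searching for nearby, visually similar colors, though how ACE actually does it isn't described here.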

If you’re looking for a college major that gives you an incredible job outlook, we have two words for you: computer science.

If you could create a digital version of yourself to stick around long after you've died, would you want to?

Artificial Intelligence (AI) is hot. One breathless press release predicted that by 2025, 95% of all customer interactions will be powered by AI.

via artificial intelligence - Google News

Report: Samsung to unveil massive Galaxy S8 handset - Read more on http://ift.tt/2iSrTLg